Thursday, April 21, 2011

Poster Finished!

Thursday, April 14, 2011

Nothing Big Today

Friday, April 8, 2011

Thinking Out Loud

Thursday, April 7, 2011

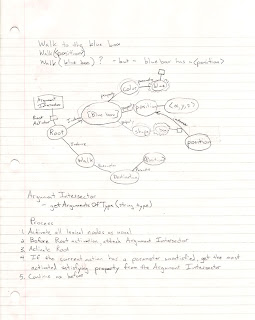

Parameters

The Beta review went well. The main thing I want to get working next is parameters. This will allow the user to say "pick up the blue box", for example. Here, blue box is the parameter for the action "pick up". I've scanned in my current plan with a diagram. It took me a while to come up with the solution, so I haven't had time to implement it yet.

Thursday, March 31, 2011

Actions

My visit to CMU was great, but I've decided on the University of Rochester. Unfortunately I didn't have any time over the weekend to work on this, but I still managed to make some great progress.

Wednesday, March 23, 2011

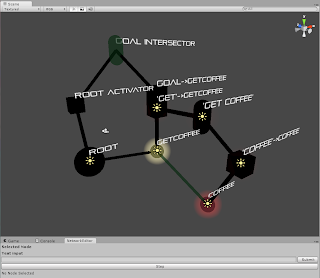

Progress Update and Beta Review

First, my latest update, in picture form. I have intersectors working, which are integral to the operation of the algorithm. In this picture, only the spherical nodes were created by me. There's the Root node, the yellow action node GetCoffee, and the red concept node Coffee. The rest are generated from the text input "Get coffee". The cubes are Activators - the Root Activator, the Goal Activator, and two Search Activators - one for "Get" and one for "Coffee". The Search Activations are red (the one for GetCoffee is hidden by the yellow Goal Activation). Finally, there is the Query Intersector "Get Coffee", which finds the intersection of the "get" and "coffee" activations, and the Goal Intersector, which finds the path between the Root node and the Goal node.
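As a rough illustration of what a Query Intersector does, here is a minimal Python sketch. It assumes each Search Activator leaves behind a mapping from node name to activation level; the `intersect` function, the scoring rule (take the weaker of the two activations), the numbers, and the extra `GetWater` node are all hypothetical and are not taken from the Unity project.

```python
def intersect(activation_a, activation_b):
    """Return the nodes reached by both activations, scored by the weaker one."""
    shared = activation_a.keys() & activation_b.keys()
    return {node: min(activation_a[node], activation_b[node]) for node in shared}


# Hypothetical activation levels left behind by the two Search Activators.
get_activation = {"GetCoffee": 0.9, "GetWater": 0.8}
coffee_activation = {"Coffee": 1.0, "GetCoffee": 0.7}

query_result = intersect(get_activation, coffee_activation)
```

Only `GetCoffee` is reached by both searches, so it is the only node left in `query_result`.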

Thursday, March 17, 2011

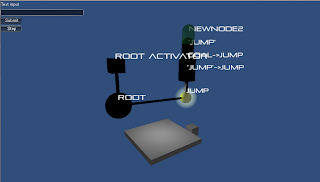

Activations in Unity

Wednesday, March 2, 2011

Not too much this week

Friday, February 25, 2011

Alpha Review

Thursday, February 17, 2011

Integration into Unity

So I feel like I've made some pretty good progress this week. I have ported all of my code into Unity, which wasn't a terrible ordeal. I just had to get rid of my constructors for all of my Nodes, make my Nodes into GameObjects, and replace my properties with public variables.

Someday :)

Monday, February 14, 2011

This is why I need to make a GUI in Unity

Here you can see all the debug information for my system. The node labeled '0' is the Root node. (I forgot to add the edge from grounds to "get grounds", sorry).

- Camera control to center on nodes and activations

- Visualization system to analyze activations (will probably use colored lights)

- Graph editing system (add/remove/edit nodes and edges)

Thursday, February 10, 2011

Basic Algorithm finished

I did some programming this weekend and my basic algorithm is working, with a few bugs. The algorithm is presented in the scan of my notes, except I don't have any AND gates yet (they require some special programming which theoretically works, but hasn't been tested).

I can pass the input "make coffee" and it finds a path from the root to the goal node (which was activated by the command). I have the actual structure lying around here somewhere; I'll scan it tomorrow.

Thursday, February 3, 2011

Test Experiment

Here you can see some of my notes. I'm envisioning a text environment to test my algorithm and some of the natural language features without going into Unity yet. The goal will be to obtain coffee in some manner.

Saturday, January 29, 2011

Fleshing Out My Algorithm

Starting to write out some code helped ground my concepts a bit. The following is a more detailed description of the basic planning algorithm. It's starting to deviate from Spreading Activation because of the gates and because activation passes information as it goes. With these plans in place I'm going to go back to coding and make changes as I go.

Directed Graph – contains nodes and directed edges

Node – contains activations. Types of nodes are as follows:

- Concept – a Node that contains a concept, such as “color” or “coffee”

- Gate – a Node that takes in one or more activations and outputs activation

- Action – a Node that contains an action to be performed, as well as a cost function for that action

  - Actions can be designated as goals

- Instance – a Node that is a clone of a concept with attributes able to be filled in

- Parameter slot – a Node to be filled in with the appropriate parameter from an activation

- Root – a generic Node that serves as the parent for all Instances in the current environment (working memory)
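The node types above could be modelled roughly as follows. This is only a sketch in Python, not the project's actual code: the names `NodeKind` and `Node`, and the choice to store activations as a dictionary keyed by source, are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Optional


class NodeKind(Enum):
    CONCEPT = auto()
    GATE = auto()
    ACTION = auto()
    INSTANCE = auto()
    PARAMETER_SLOT = auto()
    ROOT = auto()


@dataclass
class Node:
    name: str
    kind: NodeKind
    # Activation value per source (assumed representation).
    activations: dict = field(default_factory=dict)
    # Cost function, only meaningful for ACTION nodes.
    cost: Optional[Callable[[], float]] = None
    # Actions can be designated as goals.
    is_goal: bool = False


root = Node("Root", NodeKind.ROOT)
coffee = Node("coffee", NodeKind.CONCEPT)
get_coffee = Node("Get coffee", NodeKind.ACTION, cost=lambda: 1.0, is_goal=True)
```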

Edge – contains a weight to constrain search through spreading activation decay and a distance to localize inference about concepts

- Inhibitor/Activator – an Edge where activation directly affects a Node’s activation or cost function. Under this algorithm, inhibition/activation is only supported if at least one of the connected nodes is a conceptual node, since they would be activated or inhibited by a reasoning process or verbal input. For example, an inhibitor from an action node to other action nodes would make those possible actions less likely simply by considering the possibility of the inhibiting action, which is not desired. To encode behavior that makes other actions more difficult, we would have to store all possible paths and compare combinations of them to find the shortest path.

  - Example: Assume a café is closed on Sundays (I never said it was a successful café). On Sundays, the current state of the environment would activate “Sunday”, which would activate the “Closed” state node of the café. “Closed” would inhibit the action “Buy coffee” that would usually be activated as a possible action under the goal of “Get coffee”.

- Goal generator – activation along this edge makes the target action node a goal, and initiates the spreading algorithm

- Conceptual Edges

  - Is-A – an Edge that represents an IS-A relationship between two concepts

  - Attribute – an Edge that represents that one concept is an attribute of another

  - Instance-Of – an Edge that represents that one concept is an instance of a more abstract concept. Provides the link between long term memory (conceptual) and working memory (instance based)

- Parameter – the target node is a parameter
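To make the café example concrete, here is a small Python sketch of activator and inhibitor edges. Everything in it is an assumption for illustration: the edge tuples, the weights, the additive/subtractive update, and the single pass over the edges in listed order.

```python
# Each edge is (source, target, kind, weight); the weights are made up.
EDGES = [
    ("Sunday", "Closed", "activator", 1.0),
    ("Closed", "Buy coffee", "inhibitor", 1.0),
]


def apply_state(activation, edges):
    """Push activation once along each edge, in the order the edges are listed.

    Activator edges add to the target's activation, inhibitor edges subtract
    from it, and activation is clamped so it never drops below zero.
    """
    result = dict(activation)
    for source, target, kind, weight in edges:
        delta = result.get(source, 0.0) * weight
        if kind == "inhibitor":
            delta = -delta
        result[target] = max(0.0, result.get(target, 0.0) + delta)
    return result


weekday = apply_state({"Buy coffee": 0.8}, EDGES)
sunday = apply_state({"Sunday": 1.0, "Buy coffee": 0.8}, EDGES)
```

On a weekday "Buy coffee" keeps its activation, while on Sunday the "Closed" node cancels it out, so the planner would have to fall back on another way to get coffee.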

Parameters – passed through spreading activation to fulfill action or concept nodes that accept them. Parameters can be instances or concepts. Preconditions can be dependent on a particular parameter, so that a node is only activated by an activation carrying the requisite parameters. Parameters are passed from one node to the next until they reach the end of the activation.

Activation – consists of an activation value and a source. Activation spreads along the graph to activate connected nodes, with a falloff dictated by edge weights. Different activations have different impedances for various edge types. High impedance means the activation will not spread easily over that edge, whereas low impedance means it will easily spread. Activation impedance is also affected by the existing activation of a Node – activated nodes will pass on activations more readily.

- Current State Activation – the activation with a source at the root state

  - Travels along forward edges

  - Low impedance edges:

    - Preconditions

    - Instances

  - High impedance edges:

    - Attributes

- Goal Activation – the activation with a source at a goal state

  - Travels along backward edges

  - Low impedance edges:

    - Preconditions

    - Instances

  - High impedance edges:

    - Attributes

- Knowledge Query Activation (asking about concepts)

  - Travels bi-directionally

  - Low impedance edges:

    - Is-A

    - Attributes

  - High impedance edges:

    - Preconditions

    - Instances
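The impedance lists above map naturally onto a lookup table. The following Python sketch assumes that impedance acts as a simple multiplier on the activation passed across an edge; the multiplier values in `FACTOR` and the function name `spread_across` are hypothetical. It ignores travel direction and the effect of a node's existing activation, and it raises `KeyError` for edge types the lists do not mention for a given activation.

```python
# Impedance of each edge type for each kind of activation, as listed above.
IMPEDANCE = {
    "current_state": {"precondition": "low", "instance": "low", "attribute": "high"},
    "goal": {"precondition": "low", "instance": "low", "attribute": "high"},
    "knowledge_query": {
        "is_a": "low",
        "attribute": "low",
        "precondition": "high",
        "instance": "high",
    },
}

# Hypothetical multipliers: low impedance passes most of the activation on.
FACTOR = {"low": 0.9, "high": 0.2}


def spread_across(value, activation_kind, edge_kind, edge_weight=1.0):
    """Return the activation that arrives at the far end of one edge."""
    factor = FACTOR[IMPEDANCE[activation_kind][edge_kind]]
    return value * edge_weight * factor


goal_over_precondition = spread_across(1.0, "goal", "precondition")
query_over_precondition = spread_across(1.0, "knowledge_query", "precondition")
```

With these made-up factors, a goal activation crosses a precondition edge almost intact, while a knowledge query loses most of its strength on the same edge.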

Bi-directional information spreading algorithm:

A goal state is given as a command or a desired action, and a root state represents the current state of the environment. The algorithm will find a low cost sequence of actions that will satisfy the goal condition without an exhaustive search of all possibilities.

- Goal activation starts at the given goal nodes. This spreads across incident (backward) precondition edges and bi-directionally across conceptual edges and defines a goal set – the set of goals and preconditions that need to be completed by the agent.

- Current state activation starts at the root node. This spreads across forward precondition and inhibitor/activator edges and bi-directionally across conceptual edges.

- The series of most strongly activated precondition nodes forms the sequence of actions to be taken by the agent. A backward greedy search from the goal state forms a graph consisting of the nodes with the greatest activation (AND gates will traverse all incident paths). The actions will be performed according to a depth-first traversal starting from the root node. AND gates will stop the traversal until all paths have reached that gate.
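The three steps above can be sketched in Python on a toy coffee graph. This is a simplified illustration under several assumptions, not the actual implementation: the graph and its weights are invented, activation decays by multiplying edge weights, a node's total activation is the sum of its goal and current state activations, the graph is acyclic with the goal reachable from the root, and gates, inhibitors and conceptual edges are left out.

```python
from collections import defaultdict

# (source, target): weight. A hypothetical precondition graph.
EDGES = {
    ("Root", "Get water"): 0.9,
    ("Get water", "Make coffee"): 0.9,
    ("Make coffee", "Get coffee"): 0.9,
    ("Root", "Go to cafe"): 0.5,
    ("Go to cafe", "Buy coffee"): 0.9,
    ("Buy coffee", "Get coffee"): 0.9,
}


def spread(start, edges, backward=False, threshold=0.01):
    """Spread activation from `start`, keeping the strongest value per node."""
    neighbours = defaultdict(list)
    for (source, target), weight in edges.items():
        if backward:
            neighbours[target].append((source, weight))
        else:
            neighbours[source].append((target, weight))
    activation = {start: 1.0}
    frontier = [start]
    while frontier:
        node = frontier.pop()
        for nxt, weight in neighbours[node]:
            value = activation[node] * weight
            # Stop spreading once activation has decayed below the threshold.
            if value > threshold and value > activation.get(nxt, 0.0):
                activation[nxt] = value
                frontier.append(nxt)
    return activation


def plan(root, goal, edges):
    """Greedy backward search from the goal over the combined activations."""
    forward = spread(root, edges)
    backward = spread(goal, edges, backward=True)
    total = {
        node: forward.get(node, 0.0) + backward.get(node, 0.0)
        for node in set(forward) | set(backward)
    }
    predecessors = defaultdict(list)
    for source, target in edges:
        predecessors[target].append(source)
    path = [goal]
    while path[-1] != root:
        # Step back to the most strongly activated predecessor.
        path.append(max(predecessors[path[-1]], key=lambda n: total.get(n, 0.0)))
    path.reverse()
    return path


coffee_plan = plan("Root", "Get coffee", EDGES)
```

Because the edge from the root to the café is weak, the "Make coffee" branch ends up more strongly activated, and `coffee_plan` goes through "Get water" and "Make coffee".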

Additional thoughts:

Looping – looping is important for actions that need to be repeated a number of times, as well as standing orders, such as, “Tell me if you see a red car” (thanks for reminding me Norm :) ). This can be controlled using a Loop Gate, with an input for activation, one output to direct the activation back to the beginning of the “block” (in this case an action node), and one output that activates when the loop is finished. Another possibility is to reroute activation back to a Goal Generator node. To keep repetitions reasonable, I would probably need a “refractory period” implemented using a delay between activations.
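A Loop Gate with a refractory period might look something like the Python sketch below. The class name, the three return values, and the use of explicit timestamps instead of a real clock are all assumptions made to keep the example small and deterministic.

```python
class LoopGate:
    """Routes activation back to the start of a block a fixed number of times."""

    def __init__(self, repetitions, refractory_period):
        self.remaining = repetitions
        self.refractory_period = refractory_period
        self.last_fired = None

    def activate(self, now):
        """Handle an activation arriving at time `now`.

        Returns "wait" if the gate is still in its refractory period,
        "repeat" to send activation back to the start of the block, or
        "done" to fire the output that signals the loop has finished.
        """
        if self.last_fired is not None and now - self.last_fired < self.refractory_period:
            return "wait"
        self.last_fired = now
        if self.remaining > 0:
            self.remaining -= 1
            return "repeat"
        return "done"


gate = LoopGate(repetitions=2, refractory_period=1.0)
results = [gate.activate(t) for t in (0.0, 0.5, 1.0, 2.0)]
```

The activation at time 0.5 arrives too soon after the first one and is ignored, so the block repeats twice before the gate reports that the loop is finished.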

Language - I haven't mentioned much about language so far, but it's always in the back of my mind. To start, I'm going to use preplanned phrases to build the network and have the program ask about anything needed to complete a task. I hope later to use activation of concepts combined with semantic frames to improve the language aspect.

Thursday, January 27, 2011

Clarification of my Spreading Activation Algorithm

First of all, the spreading activation model I'm using is bi-directional. One activation spreads from the source, which represents the current state of the environment and all possible actions from that state, and another spreads from the goal, which is the command that should be fulfilled. The source activation travels along the outgoing edges, and the goal activation travels backward across incident edges. When they meet, I'll have a possible sequence of actions to go from the current state to the goal state. However, the first meeting of the two activations would only be optimal if all the edges were equally weighted (they aren't in this case). That's complication #1, so in my case the activation will continue until all of the activations have decayed past a certain threshold.
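A tiny Python example shows why the first meeting point is not necessarily the best one when edges are weighted. It assumes that activation decays by multiplying the edge weights along a path; the two paths and their weights are invented.

```python
from math import prod

# Hypothetical edge weights along two routes from the source to the goal.
short_path = [0.3]       # one hop, but heavily decayed
long_path = [0.9, 0.9]   # two hops, each only lightly decayed


def path_strength(weights):
    """Activation left after travelling a path, starting from 1.0."""
    return prod(weights)


short_strength = path_strength(short_path)
long_strength = path_strength(long_path)
```

The activations would meet along the one-hop path first, yet the two-hop path carries more activation, which is why spreading has to continue until everything has decayed past the threshold.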

The next complication is differentiating between AND and OR when it comes to preconditions and other relationships. If we assign a cost to a particular node in the graph corresponding to an action, say, "Make coffee", the cost will be some combination of all the requirements. So we need to get water, get coffee grounds (or get a grinder and beans for purists), and turn the coffee maker on. If any one of these tasks is particularly difficult for some reason, then the entire task will be difficult - this is an AND grouping of preconditions. Furthermore, if there are many tasks to be done, the difficulty increases.

To represent this, I'm going to say that the activation of an AND gate is the product of the incident activations. This creates the effect that many effortless actions are still effortless, but even a small number of difficult actions makes the whole action difficult.

For OR, it's the opposite case. If "Get coffee" can be satisfied by either "Making coffee" or "Buying coffee", then it doesn't matter how difficult the more difficult task is; it only matters how easy the easy task is. We'll take the path of least resistance - therefore the activation of an OR gate is the highest of the incident activations.
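The two gate rules are easy to state in code. In this Python sketch, activations are assumed to lie between 0 and 1, with 1.0 meaning effortless; the function names and the sample values are made up for illustration.

```python
from math import prod


def and_gate(incident):
    """AND: every precondition is required, so multiply the activations."""
    return prod(incident)


def or_gate(incident):
    """OR: any one option will do, so take the strongest activation."""
    return max(incident)


all_easy = and_gate([1.0, 1.0, 1.0])   # many effortless preconditions
one_hard = and_gate([1.0, 1.0, 0.1])   # a single difficult precondition
make_or_buy = or_gate([0.2, 0.9])      # only the easier option matters
```

Here `all_easy` stays at full activation, `one_hard` is dragged down by the single difficult precondition, and `make_or_buy` ignores the harder option entirely.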

Friday, January 21, 2011

Project Proposal

FinalProjectProposal