My visit to CMU was great, but I've decided on the University of Rochester. Unfortunately I didn't have any time over the weekend to work on this, but I still managed to make some great progress.

First, my interface is considerably more robust (although some things will probably break as I add more features). You can save and load scenes without losing information. This was tricky because Unity can't serialize static variables, dictionaries, or hash sets, so they aren't saved with the scene, and certain things break when you go from Edit mode to Play mode. I had to add a few workarounds to fix this. You can also delete nodes without messing anything up, but I haven't added a way to delete edges yet (it won't be hard).

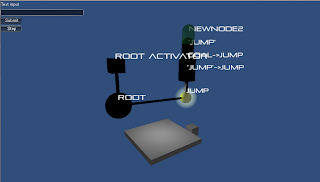

The major breakthrough for this week was getting actions working. You can assign a RigidBody object to the Root node's "Agent" parameter. This means that any actions that get performed in a sequence will be called on that RigidBody. Using this system means you theoretically could have multiple agents responding at the same time, but that would require multiple Root nodes, and I haven't thought of a good way of doing that yet.

The picture shows a block that has just been instructed to "Jump". It's a simple action, but there's a whole lot that went into it. I'll make some more complicated scenarios with different paths for the beta review.
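To make the idea concrete, here's a rough sketch of how actions in a sequence could be routed to whatever is assigned as the Root's Agent. It's plain Python rather than Unity code, and the names `Agent`, `Root`, `ACTIONS`, and `run` are made up for illustration; this is not the project's actual code.

```python
class Agent:
    """Stand-in for the physics body assigned to the Root's "Agent" parameter."""

    def __init__(self, name):
        self.name = name
        self.log = []  # records which actions were performed on this agent

    def jump(self):
        # In the real project this would presumably push the body upward;
        # here we only record that the action happened.
        self.log.append("Jump")


# Hypothetical registry mapping action-node names to agent behaviours.
ACTIONS = {"Jump": Agent.jump}


class Root:
    """Root node: owns the agent that every action in a sequence is called on."""

    def __init__(self, agent):
        self.agent = agent

    def run(self, sequence):
        # Each action name in the sequence is looked up and applied to this Root's agent.
        for action_name in sequence:
            ACTIONS[action_name](self.agent)


block = Agent("Block")
Root(block).run(["Jump"])
print(block.log)
```

In this sketch, supporting several agents at once would mean creating one `Root` per agent, which is the same open question mentioned above.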

I'm technically a little behind schedule, but since my environments will now be much easier to make, I think I'm doing OK overall.

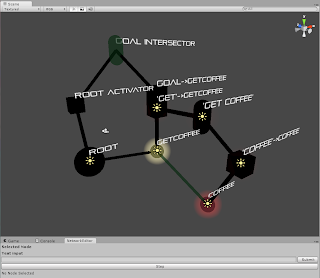

First, my latest update, in picture form. I have intersectors working, which are integral to the operation of the algorithm. In this picture, I created only the spherical nodes: the Root node, the yellow action node GetCoffee, and the red concept node Coffee. The rest are generated from the text input "Get coffee". The cubes are Activators: the Root Activator, the Goal Activator, and two Search Activators, one for "Get" and one for "Coffee". The Search Activations are red (the one for GetCoffee is hidden by the yellow Goal Activation). Finally, there is the Query Intersector "Get Coffee", which finds the intersection of the "Get" and "Coffee" activations, and the Goal Intersector, which finds the path between the Root node and the Goal node.
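Here's a simplified sketch of the two intersectors, written in Python rather than Unity code. It rests on assumptions: a word "activates" every node whose name contains it, and the Goal Intersector is modelled as a breadth-first search. The graph, `search_activation`, `query_intersection`, and `goal_path` are all hypothetical names, and the real algorithm may work quite differently.

```python
from collections import deque

# Hypothetical graph mirroring the three hand-made nodes in the picture.
EDGES = {
    "Root": ["GetCoffee"],
    "GetCoffee": ["Root", "Coffee"],
    "Coffee": ["GetCoffee"],
}


def search_activation(word):
    """Nodes activated by one word: any node whose name contains it (assumption)."""
    return {name for name in EDGES if word.lower() in name.lower()}


def query_intersection(text):
    """Intersect the activations of every word in the input text."""
    activations = [search_activation(word) for word in text.split()]
    return set.intersection(*activations) if activations else set()


def goal_path(start, goal):
    """Breadth-first search for a shortest path from start to goal, or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in EDGES[node]:
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


goals = query_intersection("Get coffee")
for goal in goals:
    print(goal_path("Root", goal))
```

With this toy graph, "Get" only matches GetCoffee while "coffee" matches both GetCoffee and Coffee, so the intersection picks out the action node as the goal.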

Someday :)
