I finally got parameters working. The QueryIntersector finds the activated parameters that are unsatisfied, and then assigns them the most activated properties that match the parameter type. What's nice is that the concept nodes start to have a purpose - in this case, matching parameter and property types. The algorithm right now is probably too greedy - I will have to make some adjustments. I'll have to decide if I want to polish it up or work on another feature that will make a nice presentation.
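Here is a minimal sketch of the greedy assignment described above. It is written in Python for brevity rather than the project's Unity C#. It assumes that each unsatisfied parameter carries a required type, and that each candidate property carries a type and an activation level. `Parameter`, `Candidate`, and `bind_parameters` are hypothetical names, not the project's actual classes.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Parameter:
    name: str
    required_kind: str           # type the filler must have, e.g. "PhysicalObject"
    value: Optional[str] = None  # None means the parameter is still unsatisfied


@dataclass
class Candidate:
    name: str
    kind: str          # type of the activated property
    activation: float  # current activation level


def bind_parameters(params: List[Parameter], candidates: List[Candidate]) -> Dict[str, str]:
    """Greedily fill each unsatisfied parameter with the most activated
    candidate whose type matches; returns the new bindings."""
    bindings: Dict[str, str] = {}
    for param in params:
        if param.value is not None:
            continue  # already satisfied
        matches = [c for c in candidates if c.kind == param.required_kind]
        if not matches:
            continue  # nothing of the right type is active; stay unsatisfied
        best = max(matches, key=lambda c: c.activation)
        param.value = best.name
        bindings[param.name] = best.name
    return bindings


# "pick up the blue box": one object parameter, three active properties
params = [Parameter("object", "PhysicalObject")]
candidates = [
    Candidate("BlueBox", "PhysicalObject", 0.9),
    Candidate("GreenSphere", "PhysicalObject", 0.4),
    Candidate("Blue", "Color", 0.95),
]
print(bind_parameters(params, candidates))  # {'object': 'BlueBox'}
```

In this sketch each parameter picks independently. Two parameters of the same type can therefore both claim the same property, which is one concrete way a greedy assignment can go wrong.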

Virtually Embodied Conversational Agent

Thursday, April 21, 2011

Poster Finished!

Short blog post today - but I've finished my poster and Joe hung it up in the lab so you can check it out at your leisure.

Thursday, April 14, 2011

Nothing Big Today

I've mostly been working on implementing parameters, but it's been tougher than I expected, mostly because of conceptual problems. My current problem is inheriting the kind of value a parameter should take, based on what the parameter is. For example, a destination is a position, so it should take a vector as its value. But we should only have to say that a position is a vector, not that a destination has a vector value as well. The next issue is how to store a parameter value for one particular instance only. Different paths to the same goal could have different values along the way. Do I store every possibility, or just compute the values once I've decided on a path of action? I'm leaning toward the latter, but that rules out finding the best path for a sequence of actions.
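One way to get this inheritance is to declare a value type only where it is stated (on Position) and then look it up by walking IS-A edges. The Python sketch below assumes single inheritance and an acyclic hierarchy. The tables and `value_type_of` are hypothetical.

```python
from typing import Dict, Optional

# IS-A edges, child concept -> parent concept (assumed single-parent and acyclic)
IS_A: Dict[str, str] = {"Destination": "Position"}

# Value types are declared once, on the concept where they are stated
VALUE_TYPE: Dict[str, str] = {"Position": "Vector"}


def value_type_of(concept: str) -> Optional[str]:
    """Walk up IS-A edges until some ancestor declares a value type."""
    current: Optional[str] = concept
    while current is not None:
        if current in VALUE_TYPE:
            return VALUE_TYPE[current]
        current = IS_A.get(current)
    return None


print(value_type_of("Destination"))  # Vector
```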

I have a Chem exam tomorrow so I haven't been able to do much on this. After tomorrow I can get back to doing serious work on it.

Friday, April 8, 2011

Thinking Out Loud

This post is mostly for me to organize my thoughts, but I thought it might be interesting to share.

One aspect that isn't covered very well in the research I've read is the crossover from long-term memory to working memory. That is, I've read a lot about general knowledge representation, but much less about instantiating those concepts for actual objects in the environment. The book "Explorations in Cognition" talks a bit about the difference between the "Mental World" and the "Real World", which is a close analogue. The difference is that in that book the mental world is what is expected, whereas I treat the "mental world" as common-sense long-term knowledge.

Objects in the environment pose a couple of challenges. First, how do we determine whether they are in the environment? The agent may have certain senses, but a single sense may not be enough to completely identify an object. For the sake of this project, we can assume that all required information is made immediately available in the small world. But even then, there's an important property of properties: whether they are satisfied or not.

In a knowledge base, an unsatisfied property means that an object has a range of possible values for a particular property. For example, an apple can be red, green, or yellow. Therefore, in our knowledge base, apple has the unsatisfied property of color, with a range of values - red, green, yellow. These properties can be satisfied by specifying the type of apple. We say a Granny Smith IS-A apple, and it satisfies the color property by setting its value to green. We could also specify that we're talking about a "red apple", and that instance would have its property satisfied.
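The apple example can be sketched as a property that holds both its allowed range and an optional value. A more specific concept satisfies the property by fixing that value. This Python sketch uses hypothetical `Property`, `Concept`, and `specialize` names, and it rejects values outside the range.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Property:
    name: str
    allowed: List[str]           # range of possible values
    value: Optional[str] = None  # set once the property is satisfied

    @property
    def satisfied(self) -> bool:
        return self.value is not None

    def satisfy(self, value: str) -> None:
        if value not in self.allowed:
            raise ValueError(f"{value!r} is not a possible {self.name}")
        self.value = value


@dataclass
class Concept:
    name: str
    parent: Optional["Concept"] = None  # IS-A edge
    properties: Dict[str, Property] = field(default_factory=dict)


def specialize(parent: Concept, name: str, **values: str) -> Concept:
    """Create a concept that IS-A `parent` and satisfies some of its properties."""
    child = Concept(name, parent)
    for prop_name, value in values.items():
        inherited = parent.properties[prop_name]
        prop = Property(prop_name, list(inherited.allowed))
        prop.satisfy(value)
        child.properties[prop_name] = prop
    return child


apple = Concept("Apple", properties={"color": Property("color", ["red", "green", "yellow"])})
granny_smith = specialize(apple, "GrannySmith", color="green")
red_apple = specialize(apple, "RedApple", color="red")
print(apple.properties["color"].satisfied, granny_smith.properties["color"].value)  # False green
```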

But what if the object that could satisfy a property isn't satisfied itself? Well then the property isn't satisfied, technically. A car is a vehicle that has wheels - that seems to satisfy the method of propulsion (or whatever you want to call it). But we don't know what kind of wheel it is, what it looks like, etc. So we can't give a full representation of what a car is without this extra information.
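The recursive rule can be sketched as a check that fails if any property is empty, or if any property's value is itself an object that isn't fully satisfied. This is Python with hypothetical names. The wheel's `size` property is an invented example of missing information. The `_seen` set is an added assumption that prevents infinite loops on cyclic graphs.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Union


@dataclass
class Node:
    name: str
    # property -> value: None = unsatisfied, str = literal value, Node = another object
    properties: Dict[str, Optional[Union[str, "Node"]]] = field(default_factory=dict)


def fully_satisfied(node: Node, _seen: Optional[Set[int]] = None) -> bool:
    """A node is fully satisfied only if every property has a value and every
    object-valued property is itself fully satisfied."""
    seen = _seen if _seen is not None else set()
    if id(node) in seen:
        return True  # already being checked higher up; don't loop on cycles
    seen.add(id(node))
    for value in node.properties.values():
        if value is None:
            return False
        if isinstance(value, Node) and not fully_satisfied(value, seen):
            return False
    return True


wheel = Node("Wheel", {"shape": "round", "size": None})  # size is unknown
car = Node("Car", {"propulsion": wheel})
print(fully_satisfied(car))  # False
```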

So say we have a box in our small environment. We know a box is an object, and it satisfies the property of "shape" with the value "cube", and it will have a color that is unique to that instance. The shape property will be connected to the concept node of "box", while the color property will be connected to the instance node of that particular box. If we want to get a property of a particular instance, we first look at the instance itself for the property, then we can move up the conceptual hierarchy (following IS-A edges) to continue looking.
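The lookup order described above (the instance first, then up the IS-A chain) could look like the following Python sketch. It assumes each node has at most one IS-A parent. `GraphNode`, `get_property`, and the blue color on the instance are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional


@dataclass
class GraphNode:
    name: str
    is_a: Optional["GraphNode"] = None  # IS-A edge to the parent concept
    properties: Dict[str, Any] = field(default_factory=dict)


def get_property(node: GraphNode, prop: str) -> Optional[Any]:
    """Check the node itself first, then follow IS-A edges upward."""
    current: Optional[GraphNode] = node
    while current is not None:
        if prop in current.properties:
            return current.properties[prop]
        current = current.is_a
    return None


obj = GraphNode("Object")
box = GraphNode("Box", is_a=obj, properties={"shape": "cube"})       # concept node
box_1 = GraphNode("box_1", is_a=box, properties={"color": "blue"})  # instance node
print(get_property(box_1, "color"), get_property(box_1, "shape"))  # blue cube
```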

I think I'm going to revise my plan from my last post. Instead of looking for a property that can fulfill the argument and trying to satisfy it immediately, I'm going to find the instance node that corresponds to the object in question. From there, I can run the ArgumentIntersector with an activator at the concept and the property that is required.
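The following sketches only the lookup step: finding the instance node for a description such as "blue box" by checking the instance's concept ancestry and its required property values. It doesn't model the ArgumentIntersector. `Instance`, `find_instances`, and the scene contents are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Instance:
    name: str
    concept: str  # concept node this instance IS-A
    properties: Dict[str, str] = field(default_factory=dict)


# Concept hierarchy, child -> parent (hypothetical)
IS_A: Dict[str, str] = {"Box": "Object", "Sphere": "Object"}


def is_a(concept: Optional[str], target: str) -> bool:
    """True if `concept` is `target` or reaches it through IS-A edges."""
    while concept is not None:
        if concept == target:
            return True
        concept = IS_A.get(concept)
    return False


def find_instances(instances: List[Instance], concept: str, **required: str) -> List[Instance]:
    """Instances of `concept` whose properties match every required value."""
    return [
        inst
        for inst in instances
        if is_a(inst.concept, concept)
        and all(inst.properties.get(k) == v for k, v in required.items())
    ]


scene = [
    Instance("box_1", "Box", {"color": "blue"}),
    Instance("box_2", "Box", {"color": "red"}),
    Instance("sphere_1", "Sphere", {"color": "green"}),
]
print([i.name for i in find_instances(scene, "Box", color="blue")])  # ['box_1']
```

Once the instance is found, its node is where an activator would be placed for the intersector run described above.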

I think that's it for now.

Thursday, April 7, 2011

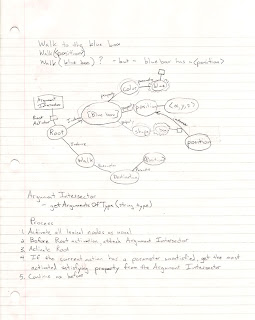

Parameters

The Beta review went well. The main thing I want to get working next is parameters. This will allow the user to say "pick up the blue box", for example. Here, "blue box" is the parameter for the action "pick up". I've scanned in a diagram of my current plan. It took me a while to come up with the solution, so I haven't had time to implement it yet.

I tried looking into some of the books I have to see how others have dealt with it, but none of the implementations seemed to deal with specifying actions to be done on objects in the environment. They all seem to be focused on a disembodied theory of cognition.

Thursday, March 31, 2011

Actions

My visit to CMU was great, but I've decided on the University of Rochester. Unfortunately I didn't have any time over the weekend to work on this, but I still managed to make some great progress.

First, my interface is considerably more robust (although some things will probably break as I add more features). You can save and load scenes without having to worry about losing information. This was kind of tricky because static variables, dictionaries, and hash sets can't be serialized by Unity, which means they won't be saved, and it also means certain things will break when you go from Edit mode to Play mode. I had to use a few workarounds to fix this. You can also delete nodes without messing anything up, but I haven't added a feature to delete edges yet (won't be hard).
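The post doesn't say which workarounds were used. A common pattern for a serializer that can't handle dictionaries is to mirror the dictionary into parallel lists before saving and rebuild it after loading. This Python sketch of that pattern uses JSON as a stand-in for Unity's serializer, and `NodeRegistry` is a hypothetical name.

```python
import json
from typing import Dict, List


class NodeRegistry:
    """Keeps a dict for fast lookup but persists only plain lists, mimicking a
    serializer that cannot store dictionaries."""

    def __init__(self) -> None:
        self._lookup: Dict[str, int] = {}  # runtime-only, never serialized directly
        self.keys: List[str] = []          # serializable mirror of the keys
        self.values: List[int] = []        # serializable mirror of the values

    def add(self, key: str, value: int) -> None:
        self._lookup[key] = value

    def get(self, key: str) -> int:
        return self._lookup[key]

    def before_save(self) -> None:
        # Flatten the dict into parallel lists just before serialization
        self.keys = list(self._lookup.keys())
        self.values = list(self._lookup.values())

    def after_load(self) -> None:
        # Rebuild the dict from the lists right after deserialization
        self._lookup = dict(zip(self.keys, self.values))

    def to_json(self) -> str:
        self.before_save()
        return json.dumps({"keys": self.keys, "values": self.values})

    @classmethod
    def from_json(cls, text: str) -> "NodeRegistry":
        data = json.loads(text)
        registry = cls()
        registry.keys, registry.values = data["keys"], data["values"]
        registry.after_load()
        return registry


reg = NodeRegistry()
reg.add("Root", 0)
reg.add("Coffee", 1)
restored = NodeRegistry.from_json(reg.to_json())
print(restored.get("Coffee"))  # 1
```

Later Unity versions added the `ISerializationCallbackReceiver` interface. Its `OnBeforeSerialize` and `OnAfterDeserialize` callbacks are intended for this kind of mirroring.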

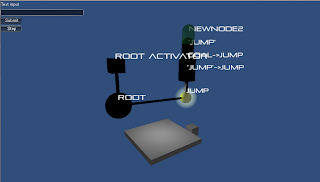

The major breakthrough for this week was getting actions working. You can assign a Rigidbody object to the Root node's "Agent" parameter. This means that any actions performed in a sequence will be called on that Rigidbody. In theory, this system means you could have multiple agents responding at the same time, but that would require multiple Root nodes, and I haven't thought of a good way of doing that yet.

The picture shows a block that has just been instructed to "Jump". It's a simple action, but there's a whole lot that went into it. I'll make some more complicated scenarios with different paths for the beta review.
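This sketch shows how an Agent slot on the Root node lets the same action sequence drive whatever body is assigned to it. It is written in Python with a stand-in `Body` class instead of Unity's `Rigidbody`. The `Jump` impulse of 5.0 and all names are hypothetical. In Unity, the action would more likely call something like `Rigidbody.AddForce` with `ForceMode.Impulse`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Body:
    """Stand-in for the physics body assigned to the Root node's Agent slot."""
    name: str
    velocity: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])

    def add_impulse(self, dx: float, dy: float, dz: float) -> None:
        self.velocity[0] += dx
        self.velocity[1] += dy
        self.velocity[2] += dz


# Action name -> effect on whichever body is the agent (impulse size is arbitrary)
ACTIONS: Dict[str, Callable[[Body], None]] = {
    "Jump": lambda body: body.add_impulse(0.0, 5.0, 0.0),
}


@dataclass
class RootNode:
    agent: Body  # the "Agent" parameter

    def perform(self, sequence: List[str]) -> None:
        """Apply each action of the chosen sequence to this root's agent."""
        for action in sequence:
            ACTIONS[action](self.agent)


cube = Body("Cube")
RootNode(cube).perform(["Jump"])
print(cube.velocity)  # [0.0, 5.0, 0.0]
```

Because each `RootNode` applies actions only to its own agent, a second root holding a different body would act independently of the first.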

I'm technically a little behind schedule, but since my environments will now be much easier to make, it works out that I'm doing OK.

Wednesday, March 23, 2011

Progress Update and Beta Review

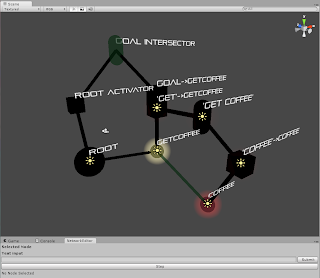

First, my latest update, in picture form. I have intersectors working, which are integral to the operation of the algorithm. In this picture, only the spherical nodes were created by me: the Root node, the yellow action node GetCoffee, and the red concept node Coffee. The rest are generated from the text input "Get coffee". The cubes are Activators: the Root Activator, the Goal Activator, and two Search Activators, one for "Get" and one for "Coffee". The Search Activations are red (the one on GetCoffee is hidden by the yellow Goal Activation). Finally, there is the Query Intersector "Get Coffee", which finds the intersection of the "Get" and "Coffee" activations, and the Goal Intersector, which finds the path between the Root node and the Goal node.
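The post doesn't specify how the intersectors score nodes, so this Python sketch makes its own assumptions:

- Activation spreads breadth-first from each activator with a fixed decay per hop.
- The Query Intersector ranks the nodes reached by both activations by the weaker of the two values.
- The Goal Intersector is approximated by a breadth-first shortest path from Root to the goal.

The graph and all names are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional

Graph = Dict[str, List[str]]  # node -> neighboring nodes


def spread(graph: Graph, source: str, decay: float = 0.5, threshold: float = 0.1) -> Dict[str, float]:
    """Breadth-first spreading activation from a single activator.

    Each hop multiplies activation by `decay`; a node stops passing activation
    on once the amount it would pass falls below `threshold`.
    """
    activation: Dict[str, float] = {source: 1.0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        passed = activation[node] * decay
        if passed < threshold:
            continue
        for neighbor in graph.get(node, []):
            if neighbor not in activation:  # in BFS the first arrival is the strongest
                activation[neighbor] = passed
                queue.append(neighbor)
    return activation


def intersect(a: Dict[str, float], b: Dict[str, float]) -> List[str]:
    """Query Intersector stand-in: nodes reached by both activations, ranked by
    the weaker of their two activation values (ties broken by name)."""
    common = set(a) & set(b)
    return sorted(common, key=lambda n: (-min(a[n], b[n]), n))


def find_path(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Goal Intersector stand-in: shortest path from start to goal via BFS."""
    parents: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: List[str] = []
            cur: Optional[str] = node
            while cur is not None:
                path.append(cur)
                cur = parents[cur]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


graph: Graph = {
    "Root": ["GetCoffee"],
    "GetCoffee": ["Root", "Get", "Coffee"],
    "Get": ["GetCoffee"],
    "Coffee": ["GetCoffee"],
}
print(intersect(spread(graph, "Get"), spread(graph, "Coffee"))[0])  # GetCoffee
print(find_path(graph, "Root", "GetCoffee"))  # ['Root', 'GetCoffee']
```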

The "Step" button at the bottom should go through the procedure to get to the goal node, but there's a small bug in it right now. (I would fix it, but I'm leaving for Pittsburgh tomorrow afternoon and might not have time to make this post later.)

Now the "Self-Evaluation". I'm really happy that the Unity interface has caught up with my original back-end progress. Building it seemed a little slow, but I think this interface will help me find bugs much more quickly; it has already helped me spot some errors. When dealing with graph networks like this, it can be very difficult to catch small things like missing edges. And while it has taken away some time that I would have liked to spend on the underlying system, I think it is both an innovative and useful tool for this application. I don't think I've seen any visualization of cognitive models before, let alone a nice-looking one like this.

I'll have to scale back some of my previous goals, but for the Beta review, I plan to have a small test environment connected to the network. A simple case would be a cube that could do something like jump, given a user's input saying "jump". If I have my Beta review on the 1st, then getting this working and making the interface more robust would be my two main goals. (Since I'll be at Carnegie Mellon this weekend I don't foresee getting much work done). If it's on the 4th, I'd like to have a more complicated environment (maybe multiple objects).

By the Final Poster session, I plan to have an environment where an agent has multiple actions available to it and can do things like "pick up the blue box" or "walk to the green sphere". Being able to take in information from the user and store relationships would be a nice added touch. Any other milestones would probably be extra features I have time to add. In general, however, I expect the major functionality to be done by the presentation date.

Also, Joe wanted me to submit a paper to IVA 2011, and that deadline is April 26th. I'm not sure how compelling my system can be by that point, but we'll see how it goes. I may focus more on the benefits of a visualization system for a language interface (since the theme of IVA 2011 is language).

Thursday, March 17, 2011

Activations in Unity

Have some progress to show tonight - I've got Activations working in Unity! I had to restructure my code a surprising amount to get this to work. In general, I had to adopt a more component-based approach. For example, instead of having specific Activation groups, I switched to an Activator object that takes a particular Activation (a prefab) as a parameter. The upshot of this is that my structure is becoming more modular and visualization-friendly.
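This Python sketch illustrates the component-based idea. An Activator is configured with a factory function that stands in for the Activation prefab; in Unity, this would be a prefab reference passed to `Object.Instantiate`. `Activator`, `Activation`, and `search_activation` are hypothetical names, and the purple color matches the screenshot.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Activation:
    node: str       # concept node this activation sits on
    color: str
    strength: float = 1.0


# A "prefab" here is just a factory that builds a configured Activation for a node
ActivationPrefab = Callable[[str], Activation]


def search_activation(node: str) -> Activation:
    return Activation(node, color="purple")


@dataclass
class Activator:
    prefab: ActivationPrefab                       # which kind of Activation to spawn
    connected: List[str] = field(default_factory=list)
    spawned: List[Activation] = field(default_factory=list)

    def activate(self) -> List[Activation]:
        """Spawn one Activation from the prefab on every connected node."""
        new = [self.prefab(node) for node in self.connected]
        self.spawned.extend(new)
        return new


activator = Activator(search_activation, ["ConceptA", "ConceptB"])
print([a.node for a in activator.activate()])  # ['ConceptA', 'ConceptB']
```

Swapping in a different factory changes what kind of Activation is spawned without touching the Activator, which is the modularity described above.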

Also, I realized that you can't use the virtual and override keywords with Awake and Update - Unity uses its own method lookup to call these methods, and if you mark a method as virtual or override, it will skip over it. Very frustrating. If you want to make your Awake or Update function semi-virtual, you can use the "new" keyword, but that version of the method will only be called if it's called through a reference of the correct type (not through an array of parent types, for example). I thought this insight might be helpful to anyone else doing Unity coding.

Anyway, here are the results. The cube is the Activator object, connected to two Concept nodes, and the purple Activations are shown after the Activator object was activated.
